Teach a Robot to FISH: Versatile Imitation from One Minute of Demonstrations

* Equal contribution

Abstract

While imitation learning provides us with an efficient toolkit to train robots, learning skills that are robust to environment variations remains a significant challenge. Current approaches address this challenge by relying either on large amounts of demonstrations that span environment variations or on handcrafted reward functions that require state estimates. Neither direction scales to fast imitation. In this work, we present Fast Imitation of Skills from Humans (FISH), a new imitation learning approach that can learn robust visual skills from less than a minute of human demonstrations. Given a weak base policy trained by offline imitation of demonstrations, FISH computes rewards that correspond to the “match” between the robot’s behavior and the demonstrations. These rewards are then used to adaptively update a residual policy that is added on top of the base policy. Across all tasks, FISH requires at most twenty minutes of interactive learning to imitate demonstrations on object configurations that were not seen in the demonstrations. Importantly, FISH is designed to be versatile, which allows it to be used across robot morphologies (e.g. xArm, Allegro, Stretch) and camera configurations (e.g. third-person, eye-in-hand). Our experimental evaluations on 9 different tasks show that FISH achieves an average success rate of 93%, which is around 3.8× higher than prior state-of-the-art methods.

Fast Imitation of Skills from Humans (FISH)

Method

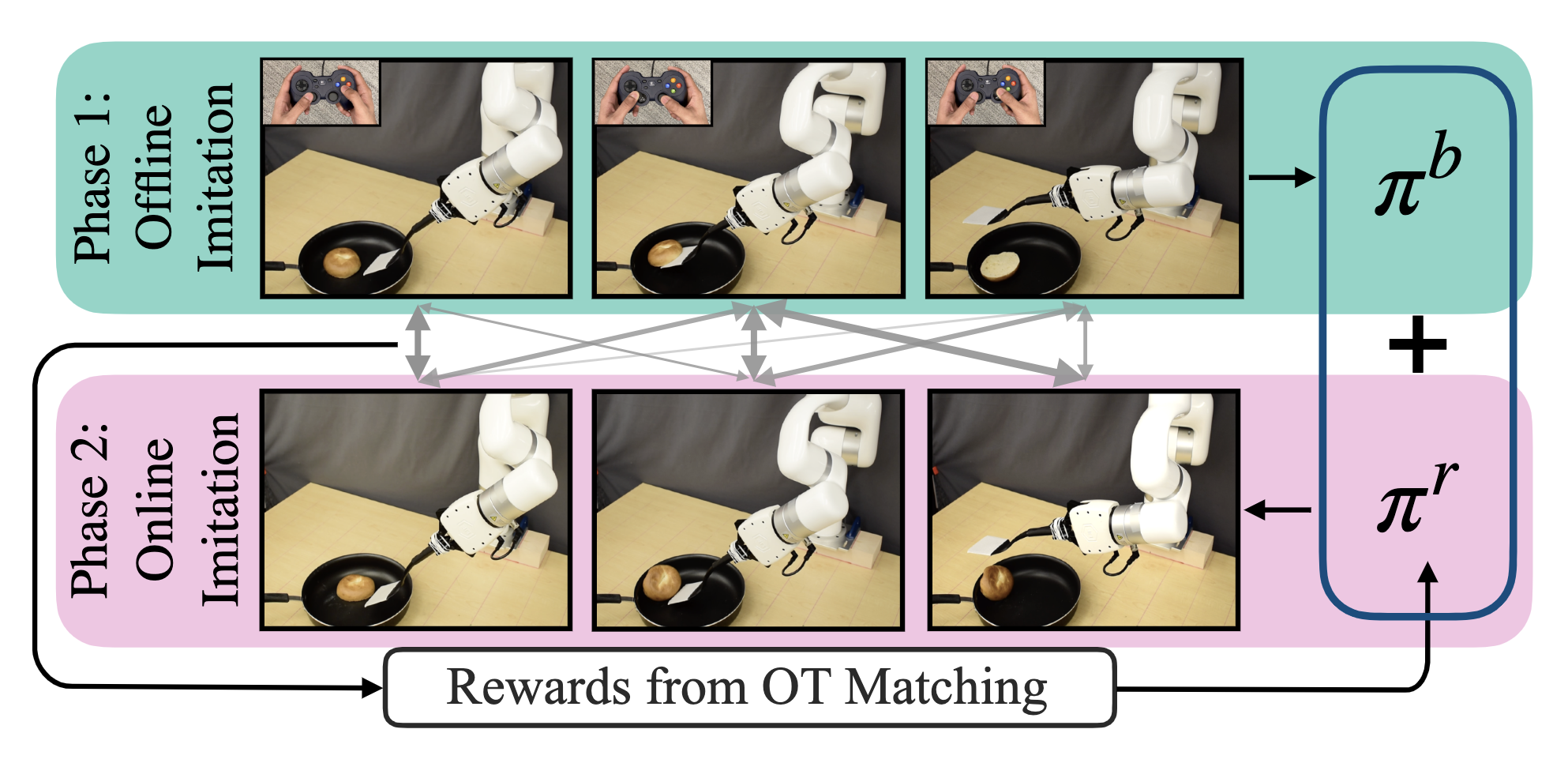

FISH operates in two phases. The first phase obtains a non-parametric base policy through offline imitation of the demonstrations. In the second phase, corrective residuals over this base policy are learned through inverse RL (IRL), using optimal transport (OT)-based trajectory matching between the expert's and the agent's behavior.
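The two phases can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on several assumptions: the base policy is taken to be a distance-weighted k-nearest-neighbour lookup over demonstration embeddings (one possible non-parametric choice), the residual is any callable whose output is added to the base action, and the OT reward uses entropic (Sinkhorn) optimal transport with a cosine cost between agent and expert feature sequences. All function names (`knn_base_policy`, `combined_action`, `cosine_cost`, `sinkhorn`, `ot_rewards`) and hyperparameters (`k`, `eps`, `n_iters`) are hypothetical.

```python
import numpy as np


def knn_base_policy(obs_emb, demo_embs, demo_actions, k=3):
    """Hypothetical non-parametric base policy: distance-weighted k-NN.

    Averages the actions of the k demonstration frames whose embeddings are
    closest to the current observation embedding (assumption, not FISH's code).
    """
    dists = np.linalg.norm(demo_embs - obs_emb, axis=1)
    idx = np.argsort(dists)[:k]
    w = np.exp(-dists[idx])  # softmax over negative distances
    w /= w.sum()
    return w @ demo_actions[idx]


def combined_action(obs_emb, demo_embs, demo_actions, residual_fn):
    """Executed action = base action + learned corrective residual."""
    base = knn_base_policy(obs_emb, demo_embs, demo_actions)
    # Assumption: the residual sees both the observation and the base action.
    return base + residual_fn(obs_emb, base)


def cosine_cost(x, y):
    """Pairwise cosine distance between rows of x (agent) and y (expert)."""
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    y = y / np.linalg.norm(y, axis=1, keepdims=True)
    return 1.0 - x @ y.T


def sinkhorn(cost, eps=0.05, n_iters=200):
    """Entropic OT plan between uniform marginals over agent/expert steps."""
    n, m = cost.shape
    a, b = np.full(n, 1.0 / n), np.full(m, 1.0 / m)
    K = np.exp(-cost / eps)
    u = np.ones(n)
    for _ in range(n_iters):
        v = b / (K.T @ u)
        u = a / (K @ v)
    return u[:, None] * K * v[None, :]


def ot_rewards(agent_feats, expert_feats, eps=0.05):
    """Per-step reward: negative transport cost assigned to each agent step."""
    cost = cosine_cost(agent_feats, expert_feats)
    plan = sinkhorn(cost, eps)
    return -(cost * plan).sum(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    expert = rng.normal(size=(50, 16))
    close = expert + 0.01 * rng.normal(size=expert.shape)  # tracks the demo
    far = rng.normal(size=(50, 16))  # unrelated behavior
    print(ot_rewards(close, expert).sum(), ot_rewards(far, expert).sum())
```

A trajectory that tracks the demonstration closely receives per-step rewards near zero, while unrelated behavior is penalized more heavily. In a full system, these rewards would drive an RL algorithm that updates the residual; that training loop is omitted here.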

Robot Results

We evaluate FISH on 9 tasks of varying difficulty across 3 robot morphologies: a robot hand, a robotic arm, and a mobile robot. FISH achieves an average success rate of 93%, around 3.8× higher than prior state-of-the-art methods in visual imitation and inverse RL.

Generalization to New Object Configurations

FISH generalizes to object positions and robot initializations that do not appear in the expert demonstrations. In the leftmost column, the blue box marks each task's region of operation, × marks indicate where the demonstrations were collected, green marks indicate positions where FISH succeeds, and red marks indicate failures.

Generalization to New Objects

Interestingly, FISH also generalizes to new objects, such as different bills and cards for the pick-up task and different types of bread for the flipping task. However, it fails when the object's visual appearance or dynamics vary drastically.

Training Runs

We provide videos showing how learning progresses during the residual learning phase of FISH.

BibTeX

@article{haldar2023teach,
  title={Teach a Robot to FISH: Versatile Imitation from One Minute of Demonstrations},
  author={Haldar, Siddhant and Pari, Jyothish and Rai, Anant and Pinto, Lerrel},
  journal={arXiv preprint arXiv:2303.01497},
  year={2023}
}